I have been having a lot of video chat conversations recently with a colleague who is on the opposite side of the continent.

Now that we have been “seeing” each other on a weekly basis, we have become very familiar with each other’s voices, facial expressions, gesticulations, and so on.

But, as is common with any video conferencing system, the audio and video signals are unpredictable. Often the video signal totally freezes up, or it lags behind the voice. It can be really distracting when the facial expressions and mouth movements do not match the sound I’m hearing.

Sometimes we prefer to just turn off the video and stick with voice.

One day after turning off the video, I came to the realization that I have become so familiar with his body language that I can pretty much guess what I would be seeing as he spoke. Basically, I realized that…

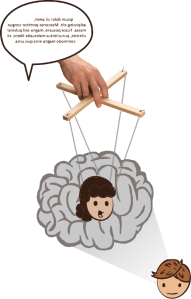

HIS VOICE WAS PUPPETEERING HIS VIRTUAL SELF IN MY BRAIN.

Since the voice of my colleague is normally synchronized with his physical gesticulations, facial expressions, and body motions, I can easily imagine the visual counterpart to his voice.

This is not new to video chat. It has been happening for a long time over the telephone, when we speak with someone we know intimately.

In fact, it may have even happened at the dawn of our species.

According to gestural theory, physical, visible gesture was once the primary communication modality in our ape ancestors. Then, our ancestors began using their hands increasingly for tool manipulation—and this created evolutionary pressure for vocal sounds to take over as the primary language delivery method. The result is that we humans can walk, use tools, and talk, all at the same time.

As gestures gave way to audible language, our ancestors could keep looking for nuts and berries while their companions were yacking on.

Here’s the point: The entire progression from gesture to voice remains as a vestigial pathway in our brains. And this is why I so easily imagine my friend gesturing at me as I listen to his voice.

Homunculi and Mirror Neurons

There are many complex structures in my brain, including several body maps that represent the positions, movements and sensations within my physical body. There are also mirror neurons – which help me to relate to and sympathize with other people. There are neural structures that cause me to recognize faces, walking gaits, and voices of people I know.

Research in evolutionary biology and neuroscience points to the possibility that language may have evolved out of, and in tandem with, gestural communication in Homo sapiens. Even as audible language was freed from the physicality of gesture, the sound of one’s voice remains naturally associated with the visual, physical energy of the source of that voice (for more on this line of reasoning, check out Terrence Deacon).

Puppeteering is the art of making something come to life, whether with strings (as in a marionette), or with your hand (as in a muppet). The greatest puppeteers know how to make the most expressive movement with the fewest strings.

The same principle applies when I am having a Skype call with my wife. I am so intimately familiar with her voice and its visual counterpart that all it takes is a few puppet strings for her to appear and begin animating in my mind – often triggered by a tiny, scratchy voice in a cell phone.

Enough pattern-recognition material has accumulated in my brain to do most of the work.

I am fascinated by the processes in our brains that allow us to build such useful and reliable inner representations of each other. And I have wondered whether we could use more biomimicry, applying more of these natural processes toward the goal of transmitting body language and voice across the internet.

These ideas are explored in depth in Voice as Puppeteer.

Posted by Jeffrey Ventrella

(This blog post is re-published from an earlier blog of mine called “avatar puppetry” – the nonverbal internet. I’ll be phasing out that earlier blog, so I’m migrating a few of those earlier posts here before I trash it).
